Splunk SPLK-2002 Splunk Enterprise Certified Architect Exam Practice Test

Splunk Enterprise Certified Architect Questions and Answers

Which two sections can be expanded using the Search Job Inspector?

Options:

Execution costs.

Saved search history.

Search job properties.

Optimization suggestions.

Answer:

A, C

Explanation:

The Search Job Inspector has two sections that can be expanded: Execution costs and Search job properties. The Search Job Inspector is a tool that provides detailed information about a search job, such as its performance, its parameters, and a link to the search log. It can be accessed by clicking the Job menu in the Search bar and selecting Inspect Job. Each section can be expanded or collapsed by clicking the arrow icon next to the section name. The Execution costs section shows the relative cost of each search component, such as commands, lookups, or subsearches, so you can see which parts of the search take the most time. The Search job properties section shows other characteristics of the job, such as the SID, the status, the duration, the disk usage, and the scan count. Optimization suggestions and Saved search history are not sections of the Search Job Inspector, so they cannot be expanded there.

Which of the following data sources are used for the Monitoring Console dashboards?

Options:

REST API calls

Splunk btool

Splunk diag

metrics.log

Answer:

A, D

Explanation:

According to Splunk Enterprise documentation for the Monitoring Console (MC), the data displayed in its dashboards is sourced primarily from two internal mechanisms — REST API calls and metrics.log.

The Monitoring Console (formerly known as the Distributed Management Console, or DMC) uses REST API endpoints to collect system-level information from all connected instances, such as indexer clustering status, license usage, and search head performance. These REST calls pull real-time configuration and performance data from Splunk’s internal management layer (/services/server/status, /services/licenser, /services/cluster/peers, etc.).

Additionally, the metrics.log file is one of the main data sources used by the Monitoring Console. This log records Splunk’s internal performance metrics, including pipeline latency, queue sizes, indexing throughput, CPU usage, and memory statistics. Dashboards like “Indexer Performance,” “Search Performance,” and “Resource Usage” are powered by searches over the _internal index that reference this log.

Other tools listed — such as btool (configuration troubleshooting utility) and diag (diagnostic archive generator) — are not used as runtime data sources for Monitoring Console dashboards. They assist in troubleshooting but are not actively queried by the MC.
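To make the metrics.log data source more concrete, here is a minimal Python sketch that splits one metrics.log-style line into its key=value fields and derives a queue fill ratio. The sample line only imitates the general shape of a metrics.log entry; its values and the helper names (`parse_metrics_line`, `queue_fill_ratio`) are illustrative assumptions, not part of Splunk.

```python
# Sample line in the general shape of a metrics.log entry (values are made up).
SAMPLE = (
    "01-15-2024 10:32:07.123 +0000 INFO  Metrics - group=queue, "
    "name=indexqueue, max_size_kb=500, current_size_kb=125, largest_size=40"
)


def parse_metrics_line(line):
    """Split a metrics.log-style line into a dict of its key=value fields."""
    # Everything after "Metrics - " is a comma-separated list of key=value pairs.
    _, _, payload = line.partition("Metrics - ")
    fields = {}
    for pair in payload.split(","):
        key, sep, value = pair.strip().partition("=")
        if sep:
            fields[key] = value
    return fields


def queue_fill_ratio(fields):
    """Fraction of the queue currently in use (hypothetical helper)."""
    return int(fields["current_size_kb"]) / int(fields["max_size_kb"])


fields = parse_metrics_line(SAMPLE)
print(fields["group"], fields["name"], queue_fill_ratio(fields))
```

In a real deployment the Monitoring Console reads these values through searches over the _internal index rather than by parsing the file directly; the sketch only shows what kind of data the log carries.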


Which command is used to initially add a search head to a single-site indexer cluster?

Options:

splunk edit cluster-config -mode searchhead -manager_uri https://10.0.0.1:8089 -secret changeme

splunk edit cluster-config -mode peer -manager_uri https://10.0.0.1:8089 -secret changeme

splunk add cluster-manager -manager_uri https://10.0.0.1:8089 -secret changeme

splunk add cluster-manager -mode searchhead -manager_uri https://10.0.0.1:8089 -secret changeme

Answer:

A

Explanation:

According to Splunk Enterprise Distributed Clustering documentation, when you add a search head to an indexer cluster, you must configure it to communicate with the Cluster Manager (previously known as Master Node). The proper way to initialize this connection is by editing the cluster configuration using the splunk edit cluster-config command.

The correct syntax for a search head is:

splunk edit cluster-config -mode searchhead -manager_uri https://

Here:

-mode searchhead specifies that this node will function as a search head that participates in distributed search across the indexer cluster.

-manager_uri provides the management URI of the cluster manager.

-secret defines the shared secret key used for secure communication between the manager and cluster members.

Once this configuration is applied, the search head must be restarted for the changes to take effect.

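Using -mode peer (Option B) is for indexers joining the cluster, not search heads. The splunk add cluster-manager command (Options C and D) is not used for the initial configuration; it connects a search head that is already configured for one indexer cluster to additional indexer clusters.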
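As a rough illustration of how the pieces of the command fit together, the following Python sketch assembles the argument list for the search head case. The function name `search_head_join_command` and the check that the URI uses https with an explicit port are assumptions made for this example; only the flags shown in the question are used.

```python
import shlex
from urllib.parse import urlparse


def search_head_join_command(manager_uri, secret):
    """Build the CLI arguments that point a search head at a cluster manager."""
    parsed = urlparse(manager_uri)
    # Assumption of this sketch: the manager is addressed as https://<host>:<port>.
    if parsed.scheme != "https" or parsed.port is None:
        raise ValueError("manager_uri should look like https://<host>:<port>")
    return [
        "splunk", "edit", "cluster-config",
        "-mode", "searchhead",
        "-manager_uri", manager_uri,
        "-secret", secret,
    ]


command = search_head_join_command("https://10.0.0.1:8089", "changeme")
print(shlex.join(command))
```

The list form can be handed to `subprocess.run` on a host where the splunk binary is on the PATH. As noted above, the search head still has to be restarted afterwards.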


What is needed to ensure that high-velocity sources will not have forwarding delays to the indexers?

Options:

Increase the default value of sessionTimeout in server.conf.

Increase the default limit for maxKBps in limits.conf.

Decrease the value of forceTimebasedAutoLB in outputs.conf.

Decrease the default value of phoneHomeIntervalInSecs in deploymentclient.conf.

Answer:

B

Explanation:

To ensure that high-velocity sources do not experience forwarding delays, increase the default limit for maxKBps in the [thruput] stanza of limits.conf. This parameter caps the rate at which a forwarder sends data to the indexers. On a universal forwarder the default is 256 KBps, which may not be sufficient for high-volume data sources. Raising the limit can reduce forwarding latency, but do so with caution, because it also increases network bandwidth consumption and indexer load.

Option A is incorrect because sessionTimeout in server.conf controls when a user session times out, not forwarding throughput. Option C is incorrect because forceTimebasedAutoLB in outputs.conf controls how the forwarder switches between indexers during load balancing, not the bandwidth limit. Option D is incorrect because phoneHomeIntervalInSecs in deploymentclient.conf controls the interval at which a forwarder contacts the deployment server, not the bandwidth limit. [1][2]

1: https://docs.splunk.com/Documentation/Splunk/9.1.2/Admin/Limitsconf#limits.conf.spec
2: https://docs.splunk.com/Documentation/Splunk/9.1.2/Forwarding/Routeandfilterdatad#Set_the_maximum_bandwidth_usage_for_a_forwarder
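The effect of the throughput cap can be seen with simple arithmetic. The Python sketch below estimates how much data is still waiting on a forwarder when a source produces data faster than maxKBps allows. The function name and the example rates are hypothetical, and the model assumes a perfectly steady input rate.

```python
def forwarding_backlog_kb(produce_kbps, max_kbps, seconds):
    """KB still waiting on the forwarder after `seconds` of steady input."""
    # In limits.conf, maxKBps = 0 means the forwarder is not throttled.
    if max_kbps == 0:
        return 0
    surplus_per_second = max(0, produce_kbps - max_kbps)
    return surplus_per_second * seconds


# A source writing 1024 KB/s against the 256 KBps default falls behind quickly.
print(forwarding_backlog_kb(1024, 256, 60))   # 46080
# Raising the cap above the production rate removes the backlog.
print(forwarding_backlog_kb(1024, 2048, 60))  # 0
```

The backlog grows linearly for as long as the source outpaces the cap, which is why the delay shows up as steadily increasing indexing latency rather than as an error.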

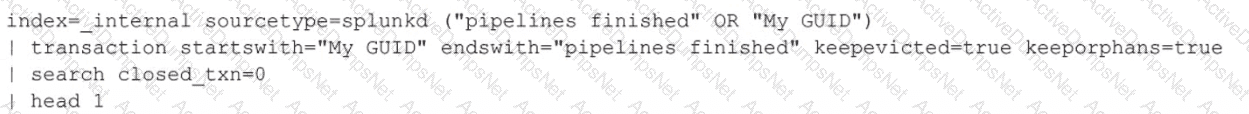

A Splunk instance has crashed, but no crash log was generated. There is an attempt to determine what user activity caused the crash by running the following search:

What does searching for closed_txn=0 do in this search?

Options:

Filters results to situations where Splunk was started and stopped multiple times.

Filters results to situations where Splunk was started and stopped once.

Filters results to situations where Splunk was stopped and then immediately restarted.

Filters results to situations where Splunk was started, but not stopped.

Answer:

D

Explanation:

Searching for closed_txn=0 in this search filters results to situations where Splunk was started, but not stopped. This means that the transaction was not completed, and Splunk crashed before it could finish the pipelines. The closed_txn field is added by the transaction command, and it indicates whether the transaction was closed by an event that matches the endswith condition [1]. A value of 0 means that the transaction was not closed, and a value of 1 means that the transaction was closed [1]. Therefore, option D is the correct answer, and options A, B, and C are incorrect.

1: transaction command overview
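The behaviour of closed_txn can be imitated with a small Python model. This is a simplified sketch, not the transaction command itself: it groups a time-ordered list of events between a start marker and an end marker and records whether each group was closed. The event strings and marker text are invented for illustration.

```python
def group_transactions(events, starts_with, ends_with):
    """Group time-ordered event strings into start/end delimited transactions."""
    transactions = []
    current = None
    for event in events:
        if starts_with in event:
            if current is not None:
                # A new start arrived before an end: keep the open group as unclosed.
                transactions.append({"events": current, "closed_txn": 0})
            current = [event]
        elif current is not None:
            current.append(event)
            if ends_with in event:
                transactions.append({"events": current, "closed_txn": 1})
                current = None
    if current is not None:
        # The data ran out before an end marker was seen.
        transactions.append({"events": current, "closed_txn": 0})
    return transactions


events = [
    "Splunkd starting",
    "search run by alice",
    "Shutting down splunkd",
    "Splunkd starting",
    "search run by bob",
]
txns = group_transactions(events, "starting", "Shutting down")
unclosed = [t for t in txns if t["closed_txn"] == 0]
print(unclosed)
```

Filtering on `closed_txn == 0` leaves only the run that started and never shut down cleanly, which is where the activity preceding a crash would be found.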

When Splunk indexes data in a non-clustered environment, what kind of files does it create by default?

Options:

Index and .tsidx files.

Rawdata and index files.

Compressed and .tsidx files.

Compressed and meta data files.

Answer:

A

Explanation:

When Splunk indexes data in a non-clustered environment, it creates index and .tsidx files by default. The index files contain the raw data that Splunk has ingested, in compressed form. The .tsidx files contain the time-series index that maps indexed terms to the location of the matching events in the raw data. The rawdata and index files are not the correct terms for the files that Splunk creates. The compressed and .tsidx files are partially correct, but compressed is not the proper name for the index files. The compressed and meta data files are also partially correct, but meta data is not the proper name for the .tsidx files.

What is the minimum reference server specification for a Splunk indexer?

Options:

12 CPU cores, 12GB RAM, 800 IOPS

16 CPU cores, 16GB RAM, 800 IOPS

24 CPU cores, 16GB RAM, 1200 IOPS

28 CPU cores, 32GB RAM, 1200 IOPS

Answer:

A

Explanation:

The minimum reference server specification for a Splunk indexer is 12 CPU cores, 12GB RAM, and 800 IOPS. This specification is based on the assumption that the indexer will handle an average indexing volume of 100GB per day, with a peak of 300GB per day, and a typical search load of 1 concurrent search per 1GB of indexing volume. The other specifications are either higher or lower than the minimum requirement. For more information, see [Reference hardware] in the Splunk documentation.

A Splunk user successfully extracted an ip address into a field called src_ip. Their colleague cannot see that field in their search results with events known to have src_ip. Which of the following may explain the problem? (Select all that apply.)

Options:

The field was extracted as a private knowledge object.

The events are tagged as communicate, but are missing the network tag.

The Typing Queue, which does regular expression replacements, is blocked.

The colleague did not explicitly use the field in the search and the search was set to Fast Mode.

Answer:

A, D

Explanation:

Two of the options can explain why the colleague cannot see the src_ip field: the field was extracted as a private knowledge object, and the colleague did not explicitly use the field in a search that was set to Fast Mode.

A knowledge object is a Splunk entity that applies some knowledge or intelligence to the data, such as a field extraction, a lookup, or a macro. A knowledge object can have different permissions, such as private, app, or global. A private knowledge object is visible only to the user who created it until its permissions are changed. If a field extraction is private, only its creator sees the extracted field in search results.

The search mode (Fast, Smart, or Verbose) determines how Splunk processes and displays search results. Fast mode turns off field discovery: it returns only the default fields, such as _time, host, source, sourcetype, and _raw, and any fields that are explicitly used in the search. A field that is neither a default field nor named in the search is not shown in Fast mode.

The other two options are not valid explanations. Tags are labels applied to field values to make them easier to search; they do not affect the visibility of fields unless they are used as filters in the search. The Typing Queue is part of the index-time data pipeline, where regular expression replacements are performed; it does not affect search-time field extraction.

A customer is migrating 500 Universal Forwarders from an old deployment server to a new deployment server, with a different DNS name. The new deployment server is configured and running.

The old deployment server deployed an app containing an updated deploymentclient.conf file to all forwarders, pointing them to the new deployment server. The app was successfully deployed to all 500 forwarders.

Why would all of the forwarders still be phoning home to the old deployment server?

Options:

There is a version mismatch between the forwarders and the new deployment server.

The new deployment server is not accepting connections from the forwarders.

The forwarders are configured to use the old deployment server in $SPLUNK_HOME/etc/system/local.

The pass4SymmKey is the same on the new deployment server and the forwarders.

Answer:

C

Explanation:

The forwarders still phone home to the old deployment server because they are configured to use it in $SPLUNK_HOME/etc/system/local. Settings in this directory take precedence over settings delivered in apps, as well as over the defaults in $SPLUNK_HOME/etc/system/default. The deploymentclient.conf file specifies the targetUri of the deployment server that the forwarder contacts for configuration updates and apps. If the old deployment server's targetUri is set in system/local, the forwarders ignore the updated deploymentclient.conf that arrived in the deployed app, because the system/local setting has higher precedence. To fix this, either remove deploymentclient.conf from system/local on the forwarders or update it with the new deployment server's targetUri.

Option A is incorrect because a version mismatch between the forwarders and the new deployment server would not prevent the forwarders from phoning home to it, as long as the versions are compatible. Option B is incorrect because the new deployment server is configured and running, and there is no indication that it is refusing connections. Option D is incorrect because pass4SymmKey is the shared secret that the deployment server and the forwarders use to authenticate each other; having the same value on both sides is what allows communication, so it would not keep the forwarders on the old server. [1][2]

1: https://docs.splunk.com/Documentation/Splunk/9.1.2/Updating/Configuredeploymentclients
2: https://docs.splunk.com/Documentation/Splunk/9.1.2/Admin/Wheretofindtheconfigurationfiles
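The precedence rule behind this answer can be sketched in a few lines of Python. The model below walks the configuration layers from highest to lowest precedence and returns the first value it finds. The layer labels, host names, and the `effective_setting` helper are illustrative assumptions; the real merge is done by Splunk itself.

```python
# Highest precedence first, as for settings evaluated in the global context.
PRECEDENCE = [
    "system/local",
    "apps/<app>/local",
    "apps/<app>/default",
    "system/default",
]


def effective_setting(layers, key):
    """Return (value, layer) for the highest-precedence layer that sets `key`."""
    for layer in PRECEDENCE:
        value = layers.get(layer, {}).get(key)
        if value is not None:
            return value, layer
    return None, None


# Hypothetical forwarder: the old server is pinned in system/local,
# while the deployed app carries the new one.
layers = {
    "system/local": {"targetUri": "old-ds.example.com:8089"},
    "apps/<app>/local": {"targetUri": "new-ds.example.com:8089"},
}
print(effective_setting(layers, "targetUri"))

# Removing the system/local entry lets the deployed app's value win.
del layers["system/local"]
print(effective_setting(layers, "targetUri"))
```

On a live forwarder, `splunk btool deploymentclient list --debug` shows which file each effective setting comes from.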

What does setting site=site0 on all Search Head Cluster members do in a multi-site indexer cluster?

Options:

Disables search site affinity.

Sets all members to dynamic captaincy.

Enables multisite search artifact replication.

Enables automatic search site affinity discovery.

Answer:

A

Explanation:

Setting site=site0 on all Search Head Cluster members disables search site affinity. Search site affinity is a feature that makes search heads prefer the peer nodes in their own site, to reduce network latency and bandwidth consumption. site0 is a special value that turns search affinity off, so the search heads search peer nodes across all sites regardless of location.

The other options do not describe what site0 does. Captaincy in a search head cluster is dynamic by default and is unrelated to the site setting. Setting site0 does not enable replication of search artifacts across sites, and it does not enable any automatic discovery of site affinity.

Consider a use case involving firewall data. There is no Splunk-supported Technical Add-On, but the vendor has built one. What are the items that must be evaluated before installing the add-on? (Select all that apply.)

Options:

Identify number of scheduled or real-time searches.

Validate if this Technical Add-On enables event data for a data model.

Identify the maximum number of forwarders Technical Add-On can support.

Verify if Technical Add-On needs to be installed onto both a search head or indexer.

Answer:

A, B

Explanation:

A Technical Add-On (TA) is a Splunk app that contains configurations for data collection, parsing, and enrichment. It can also enable event data for a data model, which is useful for creating dashboards and reports. Therefore, before installing a TA, it is important to identify the number of scheduled or real-time searches it contains, because they add load to the deployment, and to validate whether the TA enables event data for a data model. The number of forwarders that the TA can support is not relevant, because a TA is a set of configurations rather than a service with a client limit. The installation location of the TA depends on the type of data and the use case, so it is not a fixed requirement.

It is possible to lose UI edit functionality after manually editing which of the following files in the deployment server?

Options:

serverclass.conf

deploymentclient.conf

inputs.conf

deploymentserver.conf

Answer:

A

Explanation:

In Splunk Enterprise, manually editing the serverclass.conf file on a Deployment Server can lead to the loss of UI edit functionality for server classes in Splunk Web.

The Deployment Server manages app distribution to Universal Forwarders and other deployment clients through server classes, which are defined in serverclass.conf. This file maps deployment clients to specific app configurations and defines filtering rules, restart behaviors, and inclusion/exclusion criteria.

When this configuration file is modified manually (outside of Splunk Web), the syntax, formatting, or logical relationships between entries may not match what Splunk Web expects. As a result, Splunk Web may no longer be able to parse or display those server classes correctly. Once this happens, administrators cannot modify deployment settings through the GUI until the configuration file is corrected or reverted to a valid state.

Other files such as deploymentclient.conf, inputs.conf, and deploymentserver.conf control client settings, data inputs, and core server parameters but do not affect the UI-driven deployment management functionality.

Therefore, Splunk explicitly warns administrators in its Deployment Server documentation to use Splunk Web or the CLI when modifying serverclass.conf, and to avoid manual editing unless fully confident in its syntax.


A new Splunk customer is using syslog to collect data from their network devices on port 514. What is the best practice for ingesting this data into Splunk?

Options:

Configure syslog to send the data to multiple Splunk indexers.

Use a Splunk indexer to collect a network input on port 514 directly.

Use a Splunk forwarder to collect the input on port 514 and forward the data.

Configure syslog to write logs and use a Splunk forwarder to collect the logs.

Answer:

D

Explanation:

The best practice for ingesting syslog data from network devices on port 514 is to have a syslog server write the messages to log files and use a Splunk forwarder to monitor those files. The files act as a buffer, so data keeps being collected while Splunk components restart, and the forwarder can load balance the data across the indexers.

Configuring syslog to send the data to multiple Splunk indexers does not guarantee reliability, because syslog over UDP provides no acknowledgment or delivery confirmation. Using a Splunk indexer to collect a network input on port 514 directly provides neither reliability nor load balancing: data sent while the indexer restarts is lost, and everything lands on a single indexer. Using a Splunk forwarder to listen on port 514 and forward the data has the same weakness, because messages that arrive while the forwarder is down or restarting are lost. For more information, see [Get data from TCP and UDP ports] and [Best practices for syslog data] in the Splunk documentation.
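A forwarder-side configuration for this pattern might look like the stanza generated by the Python sketch below. It assumes the syslog server writes one directory per sending host under /var/log/remote, and it uses a hypothetical index named network; both are placeholders for illustration. The settings sourcetype, index, and host_segment are standard inputs.conf monitor settings.

```python
import configparser
import io


def monitor_stanza(path, sourcetype, index, host_segment):
    """Render an inputs.conf monitor stanza as text."""
    config = configparser.ConfigParser(interpolation=None)
    config.optionxform = str  # keep setting names exactly as written
    config[f"monitor://{path}"] = {
        "sourcetype": sourcetype,
        "index": index,
        # Which path segment holds the sending host's name.
        "host_segment": str(host_segment),
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


# /var/log/remote/<host>/<file>.log: the host name is the 4th path segment.
stanza = monitor_stanza("/var/log/remote/*/*.log", "syslog", "network", 4)
print(stanza)
```

Taking the host from the directory name keeps the original device as the host value, instead of the syslog server that wrote the file.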

Which index does Splunk use to record user activities?

Options:

_internal

_audit

_kvstore

_telemetry

Answer:

B

Explanation:

Splunk Enterprise uses the _audit index to log and store all user activity and audit-related information. This includes details such as user logins, searches executed, configuration changes, role modifications, and app management actions.

The _audit index is populated by data collected from the Splunkd audit logger and records actions performed through both Splunk Web and the CLI. Each event in this index typically includes fields like user, action, info, search_id, and timestamp, allowing administrators to track activity across all Splunk users and components for security, compliance, and accountability purposes.

The _internal index, by contrast, contains operational logs such as metrics.log and scheduler.log used for system performance and health monitoring. _kvstore stores internal KV Store metadata, and _telemetry is used for optional usage data reporting to Splunk.

The _audit index is thus the authoritative source for user behavior monitoring within Splunk environments and is a key component of compliance and security auditing.


How does the average run time of all searches relate to the available CPU cores on the indexers?

Options:

Average run time is independent of the number of CPU cores on the indexers.

Average run time decreases as the number of CPU cores on the indexers decreases.

Average run time increases as the number of CPU cores on the indexers decreases.

Average run time increases as the number of CPU cores on the indexers increases.

Answer:

C

Explanation:

The average run time of all searches increases as the number of CPU cores on the indexers decreases. The indexers are responsible for retrieving and filtering the data from the indexes, and each running search occupies CPU on them. The more CPU cores the indexers have, the more search work they can do in parallel and the sooner results are returned. The fewer CPU cores they have, the longer searches wait and run. The average run time is therefore not independent of the number of CPU cores. It does not decrease as the number of cores decreases, because that would mean search performance improves with fewer cores, and it does not increase as the number of cores increases, because that would mean search performance worsens with more cores.

A search head cluster with a KV store collection can be updated from where in the KV store collection?

Options:

The search head cluster captain.

The KV store primary search head.

Any search head except the captain.

Any search head in the cluster.

Answer:

D

Explanation:

According to the Splunk documentation, any search head in the cluster can update the KV store collection. The KV store collection is replicated across all the cluster members, and any write operation is delegated to the KV store captain, which then synchronizes the changes with the other members. The KV store primary search head is not a valid term, as there is no such role in a search head cluster. The other options are false because:

The search head cluster captain is not the only node that can update the KV store collection, as any member can initiate a write operation.

"Any search head except the captain" is too restrictive, as the captain can update the KV store collection like any other member.

What are the possible values for the mode attribute in server.conf for a Splunk server in the [clustering] stanza?

Options:

[clustering] mode = peer

[clustering] mode = searchhead

[clustering] mode = deployer

[clustering] mode = manager

Answer:

A, B, D

Explanation:

Within the [clustering] stanza of the server.conf file, the mode attribute defines the functional role of a Splunk instance within an indexer cluster. Splunk documentation identifies three valid modes:

mode = manager

Defines the node as the Cluster Manager (formerly called the Master Node).

Responsible for coordinating peer replication, managing configurations, and ensuring data integrity across indexers.

mode = peer

Defines the node as an Indexer (Peer Node) within the cluster.

Handles data ingestion, replication, and search operations under the control of the manager node.

mode = searchhead

Defines a Search Head that connects to the cluster for distributed searching and data retrieval.

The value “deployer” (Option C) is not valid within the [clustering] stanza; it applies to Search Head Clustering (SHC) configurations, where it is defined separately in server.conf under [shclustering].

Depending on the mode, other settings are also needed to enable proper communication and security between cluster members: manager_uri on peers and search heads, pass4SymmKey on every node, and a [replication_port://<port>] stanza on peers.
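The rule that only manager, peer, and searchhead describe a cluster role can be checked mechanically. The Python sketch below reads a server.conf-style fragment and rejects any other mode value. The function name `clustering_role` and the sample text are assumptions for this example, and the sketch ignores the rest of the file.

```python
import configparser

# The three role values described above.
CLUSTER_ROLES = {"manager", "peer", "searchhead"}

SAMPLE_SERVER_CONF = """
[clustering]
mode = searchhead
manager_uri = https://10.0.0.1:8089
pass4SymmKey = changeme
"""


def clustering_role(server_conf_text):
    """Return the cluster role from a server.conf fragment, or raise ValueError."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(server_conf_text)
    mode = parser.get("clustering", "mode", fallback=None)
    if mode not in CLUSTER_ROLES:
        raise ValueError(f"not a cluster role for [clustering]: {mode!r}")
    return mode


print(clustering_role(SAMPLE_SERVER_CONF))
```

Passing a fragment with `mode = deployer` raises ValueError, which mirrors why Option C is wrong.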


Which of the following is a best practice to maximize indexing performance?

Options:

Use automatic source typing.

Use the Splunk default settings.

Not use pre-trained source types.

Minimize configuration generality.

Answer:

D

Explanation:

A best practice to maximize indexing performance is to minimize configuration generality. Configuration generality refers to the use of generic or default settings for data inputs, such as source type, host, index, and timestamp. Minimizing configuration generality means using specific and accurate settings for each data input, which can reduce the processing overhead and improve the indexing throughput. Using automatic source typing, using the Splunk default settings, and not using pre-trained source types are examples of configuration generality, which can negatively affect the indexing performance.

A single-site indexer cluster has a replication factor of 3, and a search factor of 2. What is true about this cluster?

Options:

The cluster will ensure there are at least two copies of each bucket, and at least three copies of searchable metadata.

The cluster will ensure there are at most three copies of each bucket, and at most two copies of searchable metadata.

The cluster will ensure only two search heads are allowed to access the bucket at the same time.

The cluster will ensure there are at least three copies of each bucket, and at least two copies of searchable metadata.

Answer:

D

Explanation:

A single-site indexer cluster is a group of Splunk Enterprise instances that index and replicate data across the cluster [1]. A bucket is a directory that contains indexed data, along with metadata and other information [2]. A replication factor is the number of copies of each bucket that the cluster maintains [1]. A search factor is the number of searchable copies of each bucket that the cluster maintains [1]. A searchable copy is a copy that contains both the raw data and the index files [3]. A search head is a Splunk Enterprise instance that coordinates the search activities across the peer nodes [1].

Option D is the correct answer because it reflects the definitions of replication factor and search factor. The cluster will ensure that there are at least three copies of each bucket, each on a different peer node, to satisfy the replication factor of 3. The cluster will also ensure that at least two of those copies are searchable, one of them primary, to satisfy the search factor of 2. The primary copy is the one that takes part in searches, and the other searchable copy can be promoted to primary if the original primary copy becomes unavailable [3].

Option A is incorrect because it confuses the replication factor and the search factor. The cluster will ensure there are at least three copies of each bucket, not two, to meet the replication factor of 3. The cluster will ensure there are at least two copies of searchable metadata, not three, to meet the search factor of 2.

Option B is incorrect because it uses the wrong terms. The cluster will ensure there are at least, not at most, three copies of each bucket, to meet the replication factor of 3. The cluster will ensure there are at least, not at most, two copies of searchable metadata, to meet the search factor of 2.

Option C is incorrect because it has nothing to do with the replication factor or the search factor. The cluster does not limit the number of search heads that can access the bucket at the same time. The search head can search across multiple clusters, and the cluster can serve multiple search heads [1].

1: The basics of indexer cluster architecture - Splunk Documentation
2: About buckets - Splunk Documentation
3: Search factor - Splunk Documentation
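The relationship between the two factors reduces to simple arithmetic, sketched below in Python. The function name `bucket_copy_plan` and its dictionary keys are made up for this example; the rule that a cluster tolerates the loss of replication factor minus one peers without losing data follows from the definition of replication factor.

```python
def bucket_copy_plan(replication_factor, search_factor):
    """Summarise how many copies of each bucket a cluster aims to keep."""
    if not 1 <= search_factor <= replication_factor:
        raise ValueError("search factor must be between 1 and the replication factor")
    return {
        "total_copies": replication_factor,
        "searchable_copies": search_factor,
        # Remaining copies hold raw data without the index files.
        "non_searchable_copies": replication_factor - search_factor,
        # Peers that can fail before the last copy of a bucket is gone.
        "peer_failures_tolerated": replication_factor - 1,
    }


plan = bucket_copy_plan(replication_factor=3, search_factor=2)
print(plan)
```

For the cluster in the question this gives three copies in total, two of them searchable, and one copy that would need its index files rebuilt before it could serve searches.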

Why should intermediate forwarders be avoided when possible?

Options:

To minimize license usage and cost.

To decrease mean time between failures.

Because intermediate forwarders cannot be managed by a deployment server.

To eliminate potential performance bottlenecks.

Answer:

D

Explanation:

Intermediate forwarders are forwarders that receive data from other forwarders and then send that data to indexers. They can be useful in some scenarios, such as when network bandwidth or security constraints prevent direct forwarding to indexers, or when data needs to be routed, cloned, or modified in transit. However, intermediate forwarders also introduce additional complexity and overhead to the data pipeline, which can affect the performance and reliability of data ingestion. Therefore, intermediate forwarders should be avoided when possible, and used only when there is a clear benefit or requirement for them. Some of the drawbacks of intermediate forwarders are:

They increase the number of hops and connections in the data flow, which can introduce latency and increase the risk of data loss or corruption.

They consume more resources on the hosts where they run, such as CPU, memory, disk, and network bandwidth, which can affect the performance of other applications or processes on those hosts.

They require additional configuration and maintenance, such as setting up inputs, outputs, load balancing, security, monitoring, and troubleshooting.

They can create data duplication or inconsistency if they are not configured properly, such as when using cloning or routing rules.

Some of the references that support this answer are:

Configure an intermediate forwarder, which states: “Intermediate forwarding is where a forwarder receives data from one or more forwarders and then sends that data on to another indexer. This kind of setup is useful when, for example, you have many hosts in different geographical regions and you want to send data from those forwarders to a central host in that region before forwarding the data to an indexer. All forwarder types can act as an intermediate forwarder. However, this adds complexity to your deployment and can affect performance, so use it only when necessary.”

Intermediate data routing using universal and heavy forwarders, which states: “This document outlines a variety of Splunk options for routing data that address both technical and business requirements. Overall benefits Using splunkd intermediate data routing offers the following overall benefits: … The routing strategies described in this document enable flexibility for reliably processing data at scale. Intermediate routing enables better security in event-level data as well as in transit. The following is a list of use cases and enablers for splunkd intermediate data routing: … Limitations splunkd intermediate data routing has the following limitations: … Increased complexity and resource consumption. splunkd intermediate data routing adds complexity to the data pipeline and consumes resources on the hosts where it runs. This can affect the performance and reliability of data ingestion and other applications or processes on those hosts. Therefore, intermediate routing should be avoided when possible, and used only when there is a clear benefit or requirement for it.”

Use forwarders to get data into Splunk Enterprise, which states: “The forwarders take the Apache data and send it to your Splunk Enterprise deployment for indexing, which consolidates, stores, and makes the data available for searching. Because of their reduced resource footprint, forwarders have a minimal performance impact on the Apache servers. … Note: You can also configure a forwarder to send data to another forwarder, which then sends the data to the indexer. This is called intermediate forwarding. However, this adds complexity to your deployment and can affect performance, so use it only when necessary.”

When should a dedicated deployment server be used?

Options:

When there are more than 50 search peers.

When there are more than 50 apps to deploy to deployment clients.

When there are more than 50 deployment clients.

When there are more than 50 server classes.

Answer:

C

Explanation:

A dedicated deployment server is a Splunk instance that manages the distribution of configuration updates and apps to a set of deployment clients, such as forwarders, indexers, or search heads. A dedicated deployment server should be used when there are more than 50 deployment clients, because this number exceeds the recommended limit for a non-dedicated deployment server. A non-dedicated deployment server is a Splunk instance that also performs other roles, such as indexing or searching. Using a dedicated deployment server can improve the performance, scalability, and reliability of the deployment process. Option C is the correct answer. Option A is incorrect because the number of search peers does not affect the need for a dedicated deployment server. Search peers are indexers that participate in a distributed search. Option B is incorrect because the number of apps to deploy does not affect the need for a dedicated deployment server. Apps are packages of configurations and assets that provide specific functionality or views in Splunk. Option D is incorrect because the number of server classes does not affect the need for a dedicated deployment server. Server classes are logical groups of deployment clients that share the same configuration updates and apps [1][2].

1: https://docs.splunk.com/Documentation/Splunk/9.1.2/Updating/Aboutdeploymentserver 2: https://docs.splunk.com/Documentation/Splunk/9.1.2/Updating/Whentousedeploymentserver

(Which indexes.conf attribute would prevent an index from participating in an indexer cluster?)

Options:

available_sites = none

repFactor = 0

repFactor = auto

site_mappings = default_mapping

Answer:

B

Explanation:

The repFactor (replication factor) attribute in the indexes.conf file determines whether an index participates in indexer clustering and how many copies of its data are replicated across peer nodes.

When repFactor is set to 0, it explicitly instructs Splunk to exclude that index from participating in the cluster replication and management process. This means:

The index is not replicated across peer nodes.

It will not be managed by the Cluster Manager.

It exists only locally on the indexer where it was created.

Such indexes are typically used for local-only storage, for example custom indexes that hold diagnostic or node-specific data.

By contrast:

repFactor=auto allows the index to inherit the cluster-wide replication policy from the Cluster Manager.

available_sites and site_mappings relate to multisite configurations, controlling where copies of the data are stored, but they do not remove the index from clustering.

Setting repFactor=0, which is also the default value, is how an index is kept out of replication within a clustered environment.

References (Splunk Enterprise Documentation):

• indexes.conf Reference – repFactor Attribute Explanation

• Managing Non-Clustered Indexes in Clustered Deployments

• Indexer Clustering: Index Participation and Replication Policies

• Splunk Enterprise Admin Manual – Local-Only and Clustered Index Configurations

If .delta replication fails during knowledge bundle replication, what is the fall-back method for Splunk?

Options:

Restart splunkd.

.delta replication.

.bundle replication.

Restart mongod.

Answer:

C

Explanation:

.bundle replication is the fall-back method if .delta replication fails during knowledge bundle replication. Knowledge bundle replication is the process of distributing knowledge objects, such as lookups, macros, and field extractions, from the search head to its search peers [1]. Splunk uses two methods of knowledge bundle replication: .delta replication and .bundle replication [1]. .delta replication is the default and preferred method, because it replicates only the changes to the knowledge objects, which reduces network traffic and disk space usage [1]. However, if .delta replication fails for some reason, such as corrupted files or network errors, Splunk automatically switches to .bundle replication, which replicates the entire knowledge bundle regardless of what changed [1]. This keeps the knowledge objects synchronized between the search head and its search peers, but it consumes more network bandwidth and disk space [1]. The other options are not valid fall-back methods. Option A, restarting splunkd, is not a method of knowledge bundle replication but a way to restart the Splunk daemon on a node [2]; it may or may not clear the .delta replication failure, and it does not guarantee that the knowledge objects are synchronized. Option B, .delta replication, is the primary method, which the question assumes has already failed [1]. Option D, restarting mongod, restarts the MongoDB daemon behind the KV store [3]; KV store replication is a separate process from knowledge bundle replication [3]. Therefore, option C is the correct answer.

1: How knowledge bundle replication works 2: Start and stop Splunk Enterprise 3: Restart the KV store

(When determining where a Splunk forwarder is trying to send data, which of the following searches can provide assistance?)

Options:

index=_internal sourcetype=internal metrics destHost | dedup destHost

index=_internal sourcetype=splunkd metrics inputHost | dedup inputHost

index=_metrics sourcetype=splunkd metrics destHost | dedup destHost

index=_internal sourcetype=splunkd metrics destHost | dedup destHost

Answer:

D

Explanation:

To determine where a Splunk forwarder is attempting to send its data, administrators can search within the _internal index using the metrics logs generated by the forwarder’s Splunkd process. The correct and documented search is:

index=_internal sourcetype=splunkd metrics destHost | dedup destHost

The _internal index contains detailed operational logs from the Splunkd process, including metrics on network connections, indexing pipelines, and output groups. The field destHost records the destination indexer(s) to which the forwarder is attempting to send data. Using dedup destHost ensures that only unique destination hosts are shown.
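As a rough illustration of what the `dedup destHost` step contributes, the sketch below applies the same idea to a few metrics-style lines in Python. The sample lines and host names are hypothetical and only mimic the key=value shape of metrics events; real events carry many more fields, and Splunk itself does this work inside the search pipeline.

```python
import re

# Hypothetical sample lines; real metrics.log events contain many more fields.
SAMPLE_LINES = [
    "group=tcpout_connections, destHost=idx1.example.com, destPort=9997",
    "group=tcpout_connections, destHost=idx2.example.com, destPort=9997",
    "group=tcpout_connections, destHost=idx1.example.com, destPort=9997",
    "group=queue, name=parsingqueue, current_size=0",
]

DEST_HOST = re.compile(r"\bdestHost=([^,\s]+)")


def unique_dest_hosts(lines):
    """Return destHost values in first-seen order, one entry per host."""
    seen = {}
    for line in lines:
        match = DEST_HOST.search(line)
        if match:
            # setdefault keeps the first occurrence and ignores repeats.
            seen.setdefault(match.group(1), None)
    return list(seen)


if __name__ == "__main__":
    for host in unique_dest_hosts(SAMPLE_LINES):
        print(host)
```

Lines without a destHost field are skipped, which mirrors how the `destHost` search term filters the events before the deduplication step.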

This search is particularly useful for troubleshooting forwarding issues, such as connection failures, misconfigurations in outputs.conf, or load-balancing behavior in multi-indexer setups.

Other listed options are invalid or incorrect because:

sourcetype=internal does not exist.

index=_metrics is not where Splunk stores forwarding telemetry.

The field inputHost identifies the source host, not the destination.

Thus, Option D aligns with Splunk’s official troubleshooting practices for forwarder-to-indexer communication validation.

References (Splunk Enterprise Documentation):

• Monitoring Forwarder Connections and Destinations

• Troubleshooting Forwarding Using Internal Logs

• _internal Index Reference – Metrics and destHost Fields

• outputs.conf – Verifying Forwarder Data Routing and Connectivity

Which index-time props.conf attributes impact indexing performance? (Select all that apply.)

Options:

REPORT

LINE_BREAKER

ANNOTATE_PUNCT

SHOULD_LINEMERGE

Answer:

B, D

Explanation:

The index-time props.conf attributes keyed here as impacting indexing performance are LINE_BREAKER and SHOULD_LINEMERGE. These attributes determine how Splunk breaks the incoming data into events and whether it merges multiple lines into a single event. Line merging in particular adds work to the indexing pipeline, so these settings affect indexing speed. The REPORT attribute does not impact indexing performance, because it applies transforms at search time. ANNOTATE_PUNCT is not part of the keyed answer; note, however, that it controls whether the punct:: token is generated at index time rather than at search time, so disabling it can also reduce indexing work. For more information, see About props.conf and transforms.conf in the Splunk documentation.
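To make the event-breaking step more concrete, here is a minimal Python sketch of how a LINE_BREAKER-style regular expression splits a raw stream: the text matched by the first capturing group is treated as the delimiter and discarded. The function name, sample data, and pattern are assumptions for illustration; Splunk's own implementation and regex engine differ.

```python
import re


def break_events(raw, line_breaker):
    """Split raw text into events; capture group 1 is the discarded delimiter."""
    events = []
    start = 0
    for match in re.finditer(line_breaker, raw):
        # The event ends where group 1 begins; the next starts where it ends.
        events.append(raw[start:match.start(1)])
        start = match.end(1)
    events.append(raw[start:])
    return [event for event in events if event]


# Hypothetical sample: the second line is a continuation of the first event.
RAW = "2024-01-01 first\n  continuation\n2024-01-02 second"

# Break only on newlines that are followed by a date, so no merge pass is needed.
PATTERN = r"([\r\n]+)(?=\d{4}-\d{2}-\d{2})"

if __name__ == "__main__":
    for event in break_events(RAW, PATTERN):
        print(repr(event))
```

A pattern like this one is the usual reason for pairing a precise LINE_BREAKER with SHOULD_LINEMERGE = false: the events come out correctly in one pass, so the separate line-merging stage can be skipped.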

When adding or decommissioning a member from a Search Head Cluster (SHC), what is the proper order of operations?

Options:

1. Delete Splunk Enterprise, if it exists. 2. Install and initialize the instance. 3. Join the SHC.

1. Install and initialize the instance. 2. Delete Splunk Enterprise, if it exists. 3. Join the SHC.

1. Initialize cluster rebalance operation. 2. Remove master node from cluster. 3. Trigger replication.

1. Trigger replication. 2. Remove master node from cluster. 3. Initialize cluster rebalance operation.

Answer:

A

Explanation:

When adding or decommissioning a member from a Search Head Cluster (SHC), the proper order of operations is:

Delete Splunk Enterprise, if it exists.

Install and initialize the instance.

Join the SHC.

This order of operations ensures that the member has a clean and consistent Splunk installation before joining the SHC. Deleting Splunk Enterprise removes any existing configurations and data from the instance. Installing and initializing the instance sets up the Splunk software and the required roles and settings for the SHC. Joining the SHC adds the instance to the cluster and synchronizes the configurations and apps with the other members. The other sequences are incorrect because they either perform these steps in the wrong order or describe unrelated cluster operations.

Which server.conf attribute should be added to the master node's server.conf file when decommissioning a site in an indexer cluster?

Options:

site_mappings

available_sites

site_search_factor

site_replication_factor

Answer:

A

Explanation:

The site_mappings attribute should be added to the master node’s server.conf file when decommissioning a site in an indexer cluster. The site_mappings attribute specifies how the master node should reassign the buckets from the decommissioned site to the remaining sites. It is a comma-separated list of site pairs, where the first site is the decommissioned site and the second site is the destination site. For example, site_mappings = site1:site2,site3:site4 means that the buckets from site1 will be mapped to site2, and the buckets from site3 will be mapped to site4. The available_sites attribute lists the sites that are currently part of the cluster; it already exists in a multisite configuration, so during decommissioning it is edited to drop the removed site rather than added. The site_search_factor and site_replication_factor attributes specify the number of searchable and replicated copies of each bucket for each site; they also already exist and are not the attribute that is added for decommissioning.
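The pair syntax in the example above can be sketched in Python as a small parser. This is only an illustration of how a value such as site1:site2,site3:site4 reads; the function names are hypothetical and the sketch handles only the plain decommissioned:destination pairs described here.

```python
def parse_site_mappings(value):
    """Parse 'site1:site2,site3:site4' into {'site1': 'site2', 'site3': 'site4'}."""
    mappings = {}
    for pair in value.split(","):
        pair = pair.strip()
        if not pair:
            continue
        source, sep, target = pair.partition(":")
        source, target = source.strip(), target.strip()
        if not sep or not source or not target:
            raise ValueError(f"malformed mapping: {pair!r}")
        mappings[source] = target
    return mappings


def destination_for(origin_site, mappings):
    """Hypothetical helper: the site that now owns buckets from origin_site."""
    # Sites that were not decommissioned keep their own buckets.
    return mappings.get(origin_site, origin_site)


if __name__ == "__main__":
    mappings = parse_site_mappings("site1:site2,site3:site4")
    print(destination_for("site1", mappings))
    print(destination_for("site2", mappings))
```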

Following Splunk recommendations, where could the Monitoring Console (MC) be installed in a distributed deployment with an indexer cluster, a search head cluster, and 1000 forwarders?

Options:

On a search peer in the cluster.

On the deployment server.

On the search head cluster deployer.

On a search head in the cluster.

Answer:

C

Explanation:

The Monitoring Console (MC) is the Splunk Enterprise monitoring tool that lets you view detailed topology and performance information about your Splunk Enterprise deployment [1]. The MC can be installed on any Splunk Enterprise instance that can access the data from all the instances in the deployment [2]. However, following the Splunk recommendations, the MC should be installed on the search head cluster deployer, which is a dedicated instance that manages the configuration bundle for the search head cluster members [3]. This way, the MC can monitor the search head cluster as well as the indexer cluster and the forwarders, without affecting the performance or availability of the other instances [4]. The other options are not recommended because they either introduce additional load on the existing instances (such as A and D) or do not have access to the data from the search head cluster (such as B).

1: About the Monitoring Console - Splunk Documentation 2: Add Splunk Enterprise instances to the Monitoring Console 3: Configure the deployer - Splunk Documentation 4: [Monitoring Console setup and use - Splunk Documentation]

Which of the following is a problem that could be investigated using the Search Job Inspector?

Options:

Error messages are appearing underneath the search bar in Splunk Web.

Dashboard panels are showing "Waiting for queued job to start" on page load.

Different users are seeing different extracted fields from the same search.

Events are not being sorted in reverse chronological order.

Answer:

A

Explanation:

According to the Splunk documentation, the Search Job Inspector is a tool that you can use to troubleshoot search performance and understand the behavior of knowledge objects, such as event types, tags, lookups, and so on, within the search. You can inspect search jobs that are currently running or that have finished recently. The Search Job Inspector can help you investigate error messages that appear underneath the search bar in Splunk Web, as it can show you the details of the search job, such as the search string, the search mode, the search timeline, the search log, the search profile, and the search properties. You can use this information to identify the cause of the error and fix it. The other options are false because:

Dashboard panels showing “Waiting for queued job to start” on page load is not a problem that can be investigated using the Search Job Inspector, as it indicates that the search job has not started yet. This could be due to the search scheduler being busy or the search priority being low. You can use the Jobs page or the Monitoring Console to monitor the status of the search jobs and adjust the priority or concurrency settings if needed.

Different users seeing different extracted fields from the same search is not a problem that can be investigated using the Search Job Inspector, as it is related to the user permissions and the knowledge object sharing settings. You can use the Access Controls page or the Knowledge Manager to manage the user roles and the knowledge object visibility.

Events not being sorted in reverse chronological order is not a problem that can be investigated using the Search Job Inspector, as it is related to the search syntax and the sort command. You can use the Search Manual or the Search Reference to learn how to use the sort command and its options to sort the events by any field or criteria.

When implementing KV Store Collections in a search head cluster, which of the following considerations is true?

Options:

The KV Store Primary coordinates with the search head cluster captain when collection content changes.

The search head cluster captain is also the KV Store Primary when collection content changes.

The KV Store Collection will not allow for changes to content if there are more than 50 search heads in the cluster.

Each search head in the cluster independently updates its KV store collection when collection content changes.

Answer:

B

Explanation:

According to the Splunk documentation, in a search head cluster, the KV Store Primary is the same node as the search head cluster captain. The KV Store Primary is responsible for coordinating the replication of KV Store data across the cluster members. When any node receives a write request, the KV Store delegates the write to the KV Store Primary. The KV Store keeps the reads local, however. This ensures that the KV Store data is consistent and available across the cluster.

Which of the following items are important sizing parameters when architecting a Splunk environment? (select all that apply)

Options:

Number of concurrent users.

Volume of incoming data.

Existence of premium apps.

Number of indexes.

Answer:

A, B, C

Explanation:

Number of concurrent users: This is an important factor because it affects the search performance and resource utilization of the Splunk environment. More users mean more concurrent searches, which require more CPU, memory, and disk I/O. The number of concurrent users also determines the search head capacity and the search head clustering configuration.

Volume of incoming data: This is another crucial factor because it affects the indexing performance and storage requirements of the Splunk environment. More data means more indexing throughput, which requires more CPU, memory, and disk I/O. The volume of incoming data also determines the indexer capacity and the indexer clustering configuration.

Existence of premium apps: This is a relevant factor because some premium apps, such as Splunk Enterprise Security and Splunk IT Service Intelligence, have additional requirements and recommendations for the Splunk environment. For example, they typically call for dedicated search heads with more CPU cores and memory than a baseline deployment, so their presence changes the sizing of the search tier.

Which of the following use cases would be made possible by multi-site clustering? (select all that apply)

Options:

Use blockchain technology to audit search activity from geographically dispersed data centers.

Enable a forwarder to send data to multiple indexers.

Greatly reduce WAN traffic by preferentially searching assigned site (search affinity).

Seamlessly route searches to a redundant site in case of a site failure.

Answer:

C, D

Explanation:

According to the Splunk documentation, multi-site clustering is an indexer cluster that spans multiple physical sites, such as data centers. Each site has its own set of peer nodes and search heads. Each site also obeys site-specific replication and search factor rules. The use cases that are made possible by multi-site clustering are:

Greatly reduce WAN traffic by preferentially searching assigned site (search affinity). This means that if you configure each site so that it has both a search head and a full set of searchable data, the search head on each site will limit its searches to local peer nodes. This eliminates any need, under normal conditions, for search heads to access data on other sites, greatly reducing network traffic between sites.

Seamlessly route searches to a redundant site in case of a site failure. This means that by storing copies of your data at multiple locations, you maintain access to the data if a disaster strikes at one location. Multisite clusters provide site failover capability. If a site goes down, indexing and searching can continue on the remaining sites, without interruption or loss of data.

The other options are false because:

Use blockchain technology to audit search activity from geographically dispersed data centers. This is not a use case of multi-site clustering, as Splunk does not use blockchain technology to audit search activity. Splunk uses its own internal logs and metrics to monitor and audit search activity.

Enable a forwarder to send data to multiple indexers. This is not a use case of multi-site clustering, as forwarders can send data to multiple indexers regardless of whether they are in a single-site or multi-site cluster. This is a basic feature of forwarders that allows load balancing and high availability of data ingestion.

If there is a deployment server with many clients and one deployment client is not updating apps, which of the following should be done first?

Options:

Choose a longer phone home interval for all of the deployment clients.

Increase the number of CPU cores for the deployment server.

Choose a corrective action based on the splunkd.log of the deployment client.

Increase the amount of memory for the deployment server.

Answer:

C

Explanation:

The correct action to take first if a deployment client is not updating apps is to choose a corrective action based on the splunkd.log of the deployment client. This log file contains information about the communication between the deployment server and the deployment client, and it can help identify the root cause of the problem [1]. The other actions may or may not help, depending on the situation, but they are not the first steps to take. Choosing a longer phone home interval may reduce the load on the deployment server, but it will also delay the updates for the deployment clients [2]. Increasing the number of CPU cores or the amount of memory for the deployment server may improve its performance, but it will not fix the issue if the problem is on the deployment client side [3]. Therefore, option C is the correct answer, and options A, B, and D are incorrect.

1: Troubleshoot deployment server issues 2: Configure deployment clients 3: Hardware and software requirements for the deployment server

Which search head cluster component is responsible for pushing knowledge bundles to search peers, replicating configuration changes to search head cluster members, and scheduling jobs across the search head cluster?

Options:

Master

Captain

Deployer

Deployment server

Answer:

B

Explanation:

The captain is the search head cluster component that is responsible for pushing knowledge bundles to search peers, replicating configuration changes to search head cluster members, and scheduling jobs across the search head cluster. The captain is elected from among the search head cluster members and performs these tasks in addition to serving search requests. The master is the indexer cluster component that is responsible for managing the replication and availability of data across the peer nodes. The deployer is the standalone instance that is responsible for distributing apps and other configurations to the search head cluster members. The deployment server is the instance that is responsible for distributing apps and other configurations to the deployment clients, such as forwarders.

Before users can use a KV store, an admin must create a collection. Where is a collection defined?

Options:

kvstore.conf

collection.conf

collections.conf

kvcollections.conf

Answer:

C

Explanation:

A collection is defined in the collections.conf file, which specifies the name of the collection and, optionally, its field types. General KV store settings, such as the port, live in the [kvstore] stanza of server.conf, not in a kvstore.conf file. The other three file names are not Splunk configuration files.

In an existing Splunk environment, the new index buckets that are created each day are about half the size of the incoming data. Within each bucket, about 30% of the space is used for rawdata and about 70% for index files.

What additional information is needed to calculate the daily disk consumption, per indexer, if indexer clustering is implemented?

Options:

Total daily indexing volume, number of peer nodes, and number of accelerated searches.

Total daily indexing volume, number of peer nodes, replication factor, and search factor.

Total daily indexing volume, replication factor, search factor, and number of search heads.

Replication factor, search factor, number of accelerated searches, and total disk size across cluster.

Answer:

B

Explanation:

The additional information that is needed to calculate the daily disk consumption, per indexer, if indexer clustering is implemented, is the total daily indexing volume, the number of peer nodes, the replication factor, and the search factor. This information is required to estimate how much data is ingested, how many copies of raw data and searchable data are maintained, and how many indexers share the load. The number of accelerated searches, the number of search heads, and the total disk size across the cluster are not relevant for calculating the daily disk consumption, per indexer. For more information, see Estimate your storage requirements in the Splunk documentation.
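The calculation can be sketched in Python using the ratios given in the question: buckets are about half the size of the incoming data, with 30% of each bucket as rawdata and 70% as index files. The sketch assumes the usual sizing rule that rawdata is kept once per replicated copy and index files once per searchable copy, and that data is spread evenly across peers; the function name and the sample inputs are illustrative only.

```python
def daily_disk_per_indexer_gb(daily_volume_gb, peer_nodes,
                              replication_factor, search_factor,
                              bucket_ratio=0.5, rawdata_share=0.3):
    """Estimate the daily on-disk growth per peer node, in GB."""
    if peer_nodes <= 0:
        raise ValueError("peer_nodes must be positive")
    # One copy of the compressed rawdata and one copy of the index files.
    rawdata_gb = daily_volume_gb * bucket_ratio * rawdata_share
    index_files_gb = daily_volume_gb * bucket_ratio * (1 - rawdata_share)
    # Rawdata exists in every replicated copy; index files only in
    # searchable copies (assumed sizing rule, evenly distributed data).
    cluster_total_gb = (rawdata_gb * replication_factor
                        + index_files_gb * search_factor)
    return cluster_total_gb / peer_nodes


if __name__ == "__main__":
    # Hypothetical inputs: 100 GB/day, 5 peers, RF=3, SF=2.
    print(round(daily_disk_per_indexer_gb(100, 5, 3, 2), 2))
```

With those hypothetical inputs, rawdata is 15 GB and index files are 35 GB per copy, so the cluster stores 15 × 3 + 35 × 2 = 115 GB per day, or about 23 GB per indexer.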

Which of the following is a way to exclude search artifacts when creating a diag?

Options:

SPLUNK_HOME/bin/splunk diag --exclude

SPLUNK_HOME/bin/splunk diag --debug --refresh

SPLUNK_HOME/bin/splunk diag --disable=dispatch

SPLUNK_HOME/bin/splunk diag --filter-searchstrings

Answer:

A

Explanation:

The splunk diag --exclude command is the keyed way to exclude search artifacts when creating a diag. A diag is a diagnostic snapshot of a Splunk instance that contains various logs, configurations, and other information. Search artifacts are temporary files that are generated by search jobs and stored in the dispatch directory. They can be left out of the diag by passing --exclude with a pattern that matches the dispatch directory. The --debug --refresh combination is not a documented way to exclude content from a diag. The --disable option switches off whole diag components rather than excluding files by pattern, and it is not the form keyed here. The --filter-searchstrings option redacts sensitive information from the search strings in the diag; it does not exclude the artifacts themselves.

Which of the following artifacts are included in a Splunk diag file? (Select all that apply.)

Options:

OS settings.

Internal logs.

Customer data.

Configuration files.

Answer:

B, D

Explanation:

The following artifacts are included in a Splunk diag file:

Internal logs. These are the log files that Splunk generates to record its own activities, such as splunkd.log, metrics.log, audit.log, and others. These logs can help troubleshoot Splunk issues and monitor Splunk performance.

Configuration files. These are the files that Splunk uses to configure various aspects of its operation, such as server.conf, indexes.conf, props.conf, transforms.conf, and others. These files can help understand Splunk settings and behavior.

The following artifacts are not included in a Splunk diag file:

OS settings. These are the settings of the operating system that Splunk runs on, such as the kernel version, the memory size, the disk space, and others. The keyed answer does not count these settings as part of the Splunk diag file.

Customer data. These are the data that Splunk indexes and makes searchable, such as the rawdata and the tsidx files. These data are not part of the Splunk diag file, as they may contain sensitive or confidential information. For more information, see Generate a diagnostic snapshot of your Splunk Enterprise deployment in the Splunk documentation.

(A high-volume source and a low-volume source feed into the same index. Which of the following items best describe the impact of this design choice?)

Options:

Low volume data will improve the compression factor of the high volume data.

Search speed on low volume data will be slower than necessary.

Low volume data may move out of the index based on volume rather than age.

High volume data is optimized by the presence of low volume data.

Answer:

B, C

Explanation:

The Splunk Managing Indexes and Storage Documentation explains that when multiple data sources with significantly different ingestion rates share a single index, they also share that index's buckets and size limit. High-volume data causes buckets to fill and roll more quickly, and once the index reaches its size limit the oldest buckets are removed regardless of which source they hold. Low-volume data can therefore leave the index prematurely, even if it is relatively recent, which is why Option C is correct.

Additionally, because low-volume events are spread across the same buckets as the high-volume events, searches for the smaller dataset are inefficient. Queries that target the low-volume source have to scan the same large number of buckets that contain the high-volume data, leading to slower-than-necessary search performance, which is why Option B is correct.

Compression efficiency (Option A) and performance optimization through data mixing (Option D) are not influenced by mixing volume patterns; these are determined by the event structure and compression algorithm, not source diversity. Splunk best practices recommend separating data sources into different indexes based on usage, volume, and retention requirements to optimize both performance and lifecycle management.
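The volume-versus-age effect can be shown with simple arithmetic. The sketch below assumes an index with a fixed size cap (the kind of limit set with maxTotalDataSizeMB in indexes.conf) and steady daily on-disk growth per source; all numbers and names are hypothetical.

```python
def days_until_size_eviction(max_index_size_gb, daily_on_disk_gb_by_source):
    """Approximate days of data an index holds before its size cap evicts buckets."""
    total_per_day = sum(daily_on_disk_gb_by_source.values())
    if total_per_day <= 0:
        raise ValueError("daily growth must be positive")
    return max_index_size_gb / total_per_day


if __name__ == "__main__":
    # Shared index: a 500 GB cap fed by a 50 GB/day and a 1 GB/day source.
    shared = days_until_size_eviction(500, {"high_volume": 50, "low_volume": 1})
    # Dedicated index for the low-volume source with a much smaller 50 GB cap.
    dedicated = days_until_size_eviction(50, {"low_volume": 1})
    print(f"shared index keeps about {shared:.1f} days")
    print(f"dedicated index keeps about {dedicated:.1f} days")
```

In this illustration the shared index retains under 10 days of the low-volume data, while a dedicated index one tenth the size retains 50 days, which is the reasoning behind separating sources with very different volumes.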

References (Splunk Enterprise Documentation):

• Managing Indexes and Storage – How Splunk Manages Buckets and Data Aging

• Splunk Indexing Performance and Data Organization Best Practices

• Splunk Enterprise Architecture and Data Lifecycle Management

• Best Practices for Data Volume Segregation and Retention Policies

In a four site indexer cluster, which configuration stores two searchable copies at the origin site, one searchable copy at site2, and a total of four searchable copies?

Options:

site_search_factor = origin:2, site1:2, total:4

site_search_factor = origin:2, site2:1, total:4

site_replication_factor = origin:2, site1:2, total:4

site_replication_factor = origin:2, site2:1, total:4

Answer:

B

Explanation:

In a four site indexer cluster, the configuration that stores two searchable copies at the origin site, one searchable copy at site2, and a total of four searchable copies is site_search_factor = origin:2, site2:1, total:4. This configuration tells the cluster to maintain two copies of searchable data at the site where the data originates, one copy of searchable data at site2, and a total of four copies of searchable data across all sites. The site_search_factor determines how many copies of searchable data are maintained by the cluster for each site. The site_replication_factor determines how many copies of raw data are maintained by the cluster for each site. For more information, see Configure multisite indexer clusters with server.conf in the Splunk documentation.
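To make the syntax easier to read, the following Python sketch parses a value such as origin:2, site2:1, total:4 and reports how many copies are not pinned to a named site. It is an illustration only: the function names are hypothetical, and it does not model every rule Splunk applies, for example when the origin site is also one of the explicitly listed sites.

```python
def parse_site_factor(value):
    """Parse 'origin:2, site2:1, total:4' into a dict of integer counts."""
    factor = {}
    for part in value.split(","):
        key, sep, count = part.strip().partition(":")
        if not sep:
            raise ValueError(f"malformed entry: {part!r}")
        factor[key.strip()] = int(count)
    for required in ("origin", "total"):
        if required not in factor:
            raise ValueError(f"missing {required!r} entry")
    return factor


def unassigned_copies(factor):
    """Copies left over after the origin and explicitly named sites are served."""
    explicit = sum(count for key, count in factor.items() if key != "total")
    return factor["total"] - explicit


if __name__ == "__main__":
    factor = parse_site_factor("origin:2, site2:1, total:4")
    print(factor)
    print(unassigned_copies(factor))
```

For the keyed answer, two copies go to the origin site and one to site2, leaving one of the four searchable copies to be placed on another site.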

(An admin removed and re-added search head cluster (SHC) members as part of patching the operating system. When trying to re-add the first member, a script reverted the SHC member to a previous backup, and the member refuses to join the cluster. What is the best approach to fix the member so that it can re-join?)

Options:

Review splunkd.log for configuration changes preventing the addition of the member.

Delete the [shclustering] stanza in server.conf and restart Splunk.

Force the member add by running splunk edit shcluster-config —force.

Clean the Raft metadata using splunk clean raft.

Answer:

D

Explanation:

According to Splunk's search head clustering troubleshooting guidance, when a search head cluster (SHC) member is reverted from a backup, or otherwise ends up with an outdated Raft state, it can fail to rejoin the cluster because its Raft metadata no longer matches the cluster's. The Raft state records the member's view of the cluster consensus, including captain election history and peer membership.

If this Raft metadata becomes corrupted or outdated (as in the scenario where a node is restored from backup), the recommended and Splunk-supported remediation is to clean the Raft metadata using:

splunk clean raft

This command resets the node’s local Raft state so it can re-synchronize with the current SHC captain and rejoin the cluster cleanly.

The steps generally are:

Stop the affected SHC member.

Run splunk clean raft on that node.

Restart Splunk.

Verify that it successfully rejoins the SHC.
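The sequence above can be sketched as a dry run in Python. The helper below only builds the command lines and does not execute anything; the function name and the /opt/splunk install path are assumptions for illustration, and the final show shcluster-status call is one way to perform the verification step.

```python
def raft_reset_commands(splunk_home="/opt/splunk"):
    """Return the CLI calls, in order, for resetting Raft state on one member."""
    splunk = f"{splunk_home}/bin/splunk"  # assumed install location
    return [
        [splunk, "stop"],                      # stop the affected member
        [splunk, "clean", "raft"],             # discard its local Raft metadata
        [splunk, "start"],                     # restart Splunk
        [splunk, "show", "shcluster-status"],  # check that it rejoined
    ]


for command in raft_reset_commands():
    print(" ".join(command))
```

Printing the commands first makes it easy to review them before running each one by hand on the affected member.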

Deleting configuration stanzas or forcing re-addition (Options B and C) can lead to further inconsistency or data loss. Reviewing logs (Option A) helps diagnose issues but does not resolve Raft corruption.

References (Splunk Enterprise Documentation):

• Troubleshooting Raft Metadata Corruption in Search Head Clusters

• splunk clean raft Command Reference

• Search Head Clustering: Recovering from Backup and Membership Failures

• Splunk Enterprise Admin Manual – Raft Consensus and SHC Maintenance

(A customer creates a saved search that runs on a specific interval. Which internal Splunk log should be viewed to determine if the search ran recently?)

Options:

metrics.log

kvstore.log

scheduler.log

btool.log

Answer:

C

Explanation:

According to Splunk’s Search Scheduler and Job Management documentation, scheduler.log records the execution of scheduled and saved searches. The file is written under $SPLUNK_HOME/var/log/splunk and indexed into the _internal index. It provides a detailed record of when each search is triggered, how long it runs, and whether it succeeded or failed.

Each time a scheduled search runs (for example, alerts, reports, or summary index searches), an entry is written to scheduler.log with fields such as:

sid (search job ID)

app (application context)

savedsearch_name (name of the saved search)

user (owner)

status (success, skipped, or failed)

run_time and result_count

By searching the _internal index for sourcetype=scheduler (or directly viewing scheduler.log), administrators can confirm whether a specific saved search executed as expected and diagnose skipped or delayed runs due to resource contention or concurrency limits.
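A minimal sketch of such a search, written as a Python helper that assembles the SPL string. The function name and the default 24-hour window are assumptions for illustration; the field names are the ones listed above.

```python
def scheduler_search(savedsearch_name, earliest="-24h"):
    """Build an SPL string listing recent scheduler.log entries for one search."""
    # Escape double quotes so the name stays inside its quoted value.
    escaped = savedsearch_name.replace('"', '\\"')
    return (
        f'index=_internal sourcetype=scheduler savedsearch_name="{escaped}" '
        f"earliest={earliest} "
        "| table _time savedsearch_name status run_time result_count sid"
    )


print(scheduler_search("Daily Errors"))
```

The resulting string can be pasted into the search bar; an empty result for the chosen window suggests the scheduler did not run the search in that period.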

Other internal logs serve different purposes:

metrics.log records performance metrics.

kvstore.log tracks KV Store operations.

btool.log does not exist — btool outputs configuration data to the console, not a log file.

Hence, scheduler.log is the definitive and Splunk-documented source for validating scheduled search activity.

References (Splunk Enterprise Documentation):

• Saved Searches and Alerts – Scheduler Operation Details

• scheduler.log Reference – Monitoring Scheduled Search Execution

• Monitoring Console: Search Scheduler Health Dashboard

• Troubleshooting Skipped or Delayed Scheduled Searches

Which search will show all deployment client messages from the client (UF)?

Options:

index=_audit component=DC* host=

index=_audit component=DC* host=

index=_internal component=DC* host=

index=_internal component=DS* host=

Answer:

C

Explanation:

The index=_internal component=DC* host=

New data has been added to a monitor input file. However, searches only show older data.

Which splunkd.log channel would help troubleshoot this issue?

Options:

ModularInputs

TailingProcessor

ChunkedLBProcessor

ArchiveProcessor

Answer:

B

Explanation:

The TailingProcessor channel in splunkd.log would help troubleshoot this issue because it contains information about the files that Splunk monitors and indexes, such as the file path, size, modification time, and CRC checksum. It also logs errors and warnings raised during file monitoring, such as permission problems, file rotation, or file truncation. These messages show whether Splunk is reading the new data from the monitored file and, if not, what is preventing it, so Option B is correct.

Option A is incorrect because the ModularInputs channel logs information about modular inputs, which collect data from external sources such as scripts, APIs, or custom applications, not about monitored files. Option C is incorrect because ChunkedLBProcessor messages concern event breaking of data chunks rather than the tailing of monitored files. Option D is incorrect because ArchiveProcessor messages concern the handling of archive (compressed) files rather than new data appended to an ordinary monitored file. [1][2]

1: https://docs.splunk.com/Documentation/Splunk/9.1.2/Troubleshooting/WhatSplunklogsaboutitself#splunkd.log

2: https://docs.splunk.com/Documentation/Splunk/9.1.2/Troubleshooting/Didyouloseyourfishbucket#Check_the_splunkd.log_file

When should a Universal Forwarder be used instead of a Heavy Forwarder?

Options:

When most of the data requires masking.

When there is a high-velocity data source.

When data comes directly from a database server.

When a modular input is needed.

Answer:

B

Explanation:

According to the Splunk blog, the Universal Forwarder is ideal for collecting data from high-velocity data sources, such as a syslog server, because of its smaller footprint and faster performance. The Universal Forwarder performs minimal processing and sends unparsed data to the indexers, which reduces network traffic and the load on the forwarder. The other options are incorrect because:

When most of the data requires masking, a Heavy Forwarder is needed, as it can perform advanced filtering and data transformation before forwarding the data.

When data comes directly from a database server, a Heavy Forwarder is needed, as it can run modular inputs such as DB Connect to collect data from various databases.

When a modular input is needed, a Heavy Forwarder is needed, as the Universal Forwarder does not include a bundled version of Python, which most modular inputs require.

An index has large text log entries with many unique terms in the raw data. Other than the raw data, which index components will take the most space?

Options:

Index files (*.tsidx files).

Bloom filters (bloomfilter files).

Index source metadata (sources.data files).

Index sourcetype metadata (SourceTypes.data files).

Answer:

A

Explanation:

Index files (.tsidx files) hold the inverted index: a lexicon of the terms found in the events, with pointers to where each term occurs in the raw data, which is stored separately in the compressed rawdata journal. After the raw data itself, the .tsidx files take the most space in an index, especially when the raw data has many unique terms, because every unique term adds an entry to the lexicon. Bloom filters, source metadata, and sourcetype metadata are much smaller in comparison and grow far less with the number of unique terms.
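The effect of unique terms on an inverted index can be seen in a toy Python sketch. This is not the .tsidx file format; it is a simplified term-to-event mapping, with hypothetical sample events, that only shows how the number of index entries grows with the number of distinct terms.

```python
from collections import defaultdict


def build_inverted_index(events):
    """Map each lowercased term to the list of event numbers that contain it."""
    index = defaultdict(list)
    for event_id, event in enumerate(events):
        for term in set(event.lower().split()):
            index[term].append(event_id)
    return index


repetitive = ["status ok", "status ok", "status ok"]
varied = ["user alice login", "host web01 restart", "job 7731 failed"]

# Same number of events, but very different numbers of index entries.
print(len(build_inverted_index(repetitive)))
print(len(build_inverted_index(varied)))
```

Both sample sets contain three events, yet the varied set produces many more index entries because almost every term in it is new.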

In splunkd.log events written to the _internal index, which field identifies the specific log channel?

Options:

component

source

sourcetype

channel

Answer:

A

Explanation:

In splunkd.log events written to the _internal index, the component field identifies the log channel that produced the message, for example TailingProcessor. This is why searches such as index=_internal component=DC* are used to isolate deployment client messages. The source and sourcetype fields identify the log file and its format rather than the channel, and channel is not a default field extracted for splunkd.log events.

When using the props.conf LINE_BREAKER attribute to delimit multi-line events, the SHOULD_LINEMERGE attribute should be set to what?

Options:

Auto

None

True

False

Answer:

D

Explanation:

When using the props.conf LINE_BREAKER attribute to delimit multi-line events, the SHOULD_LINEMERGE attribute should be set to false. This tells Splunk to treat each chunk produced by LINE_BREAKER as a complete event and not to merge chunks afterwards. With SHOULD_LINEMERGE set to true (the default), Splunk still applies LINE_BREAKER but then re-merges the resulting lines using line-merging rules such as BREAK_ONLY_BEFORE, which is slower and can undo the intended boundaries. SHOULD_LINEMERGE is a Boolean setting, so auto and none are not valid values. For more information, see Configure event line breaking in the Splunk documentation.
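The way a LINE_BREAKER regex delimits events can be approximated in Python. The sketch below is an emulation for illustration, not Splunk's implementation: it assumes the documented behavior that the text matched by the first capture group is discarded, with everything before it ending one event and everything after it starting the next. The function name and the sample log lines are hypothetical.

```python
import re


def break_events(raw, line_breaker):
    """Split raw text into events at the first capture group of line_breaker."""
    events, start = [], 0
    for match in re.finditer(line_breaker, raw):
        events.append(raw[start:match.start(1)])  # text before group 1
        start = match.end(1)                      # group 1 itself is dropped
    events.append(raw[start:])
    return [event for event in events if event]


raw = "2024-01-01 start\n  detail a\n2024-01-02 next\n  detail b"
# Break only on newlines that are followed by a date.
for event in break_events(raw, r"([\r\n]+)\d{4}-\d{2}-\d{2}"):
    print(repr(event))
```

Because the regex only matches newlines followed by a date, the indented continuation lines stay attached to their event, and no later merging step is needed.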

A search head has successfully joined a single site indexer cluster. Which command is used to configure the same search head to join another indexer cluster?

Options:

splunk add cluster-config

splunk add cluster-master

splunk edit cluster-config

splunk edit cluster-master

Answer:

B

Explanation:

The splunk add cluster-master command configures a search head that already belongs to one indexer cluster to join another. A search head can search multiple indexer clusters when its server.conf file contains an entry for each cluster's master node. The splunk add cluster-master command adds such an entry, given the host name and port number of the other cluster's master node. The splunk edit cluster-config command is what joins the search head to its first indexer cluster, and it edits that existing configuration rather than adding a second cluster. The splunk edit cluster-master command modifies an entry that has already been added, so it cannot be used to join a new cluster, and splunk add cluster-config is not the command for this task.

(A customer wishes to keep costs to a minimum, while still implementing Search Head Clustering (SHC). What are the minimum supported architecture standards?)

Options:

Three Search Heads and One SHC Deployer

Two Search Heads with the SHC Deployer being hosted on one of the Search Heads

Three Search Heads but using a Deployment Server instead of a SHC Deployer

Two Search Heads, with the SHC Deployer being on the Deployment Server

Answer:

A

Explanation:

Splunk Enterprise officially requires a minimum of three search heads and one deployer for a supported Search Head Cluster (SHC) configuration. This ensures both high availability and data consistency within the cluster.

The Splunk documentation explains that a search head cluster uses RAFT-based consensus to elect a captain responsible for managing configuration replication, scheduling, and user workload distribution. The RAFT protocol requires a quorum of members to maintain consistency. In practical terms, this means a minimum of three members (search heads) to achieve fault tolerance — allowing one member to fail while maintaining operational stability.
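The quorum arithmetic behind this can be checked with a short Python sketch. It applies the standard Raft majority rule (more than half of the members); the function names are hypothetical.

```python
def majority(members):
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def tolerated_failures(members):
    """Members that can fail while a majority remains available."""
    return members - majority(members)


for size in (2, 3, 5):
    print(size, majority(size), tolerated_failures(size))
```

A two-member cluster needs both members for a majority and therefore tolerates no failures, while a three-member cluster still has a majority after losing one member.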

The deployer is a separate Splunk instance responsible for distributing configuration bundles (apps, settings, and user configurations) to all members of the search head cluster. The deployer is not part of the SHC itself but is mandatory for its proper management.

Running with fewer than three search heads or replacing the deployer with a Deployment Server (as in Options B, C, or D) is unsupported and violates Splunk best practices for SHC resiliency and management.

References (Splunk Enterprise Documentation):

• Search Head Clustering Overview – Minimum Supported Architecture

• Deploy and Configure the Deployer for a Search Head Cluster

• High Availability and Fault Tolerance with RAFT in SHC

The master node distributes configuration bundles to peer nodes. In which directory do peer nodes receive the bundles?

Options:

apps

deployment-apps

slave-apps

master-apps

Answer:

C

Explanation: